Today I'm at WWW2004 in New York again, I'll be here through Saturday, and live updates will continue as long as my laptop battery and the wireless network hold out. There may be fewer photos today, though, since I forgot to charge up my camera battery last night. :-(

By the way, if anyone wants to post notes from the sessions I can't attend (they're about six running in parallel) I'll be happy to post them here.

Thursday kicks off with keynotes by Udi Manber of Amazon.com and Rick Rashid from Microsoft. Apparently this morning is a joint session with the ACM SIG-ECOM. I guess there's an e-commerce conference running simultaneously.

Rashid's nominal topic is "Empowering the Individual". "Scientists and engineers don't create the future. We create the physical and intellectual raw materials from which the future can be built." He's mostly telling us things we already knew, including that the Internet squashed Microsoft's vision of the Information superhighway as effectively as an 18-wheeler squashes an armadillo (my metaphor, not his). Plus he's said several things that sound great, but that Microsoft is actively working against, notable sharing what you want, when you want, with who you want. He's feeding us a line of absolute crap about a virtual telescope that just doesn't fit with how astronomy really works. There may be something valuable in what he's referring to, but there's no way it's anything like what he's saying.

He's talking about people sharing with each other. He's not (yet) talking about sharing with big corporations, telemarketers, law enforcement, etc. Something called Wallop is Microsoft's response to blogging/Friendster. They add rich multimedia information. It involves a dynamically computed social network.

Moving on to storage. What happens when the individual has a terabyte of storage? Today, it costs about $1000. That's a lifetime of conversation or a year of full-motion video. (I'm not sure those numbers add up. One DVD is five gigs and that's only two hours). Stuff I've Seen: archive everything you've ever looked at on the Web, all e-mail (from Outlook), and everything in your Documents folder. The logical extreme is the SenseCam (Lyndsay Williams, et al), a gargoyle? in Neal Stephenson's terminology (Snowcrash) that records everything you see and hear. Well, not quite everything. The current prototype only captures 2000 still images a day. Image captures can be triggered by various triggers. It uses a fisheye lens, which is a bad idea (IMO). A regular lens that focuses on what I'm looking at would make a lot more sense. I'm generally not very interested in what happens 90° away from where I'm looking. Of course, this raises privacy issues. Can the government subpoena this stuff? Of course. They can subpoena personal diaries now.

Anyone know of a good audio recording package for Mac OS X that allows me to record lectures straight from my PowerBook? Maybe iMovie can do it? Hmm, it's complaining about the disk responding too slowly. It also seemed to stop recording after about 14 seconds. And the audio quality is pretty low. Pradeep Bashyal and Stuart Roebuck both suggested Audio Recorder. Downloading it now. At first glance, it seems to work, and it's nice and simple. It takes about 1 meg a minute for MP3 recording. After the session is over I'll have to see if it actually recorded anything. David Pogue suggests Microsoft Word 2004.

Udi Manber of Amazon/A9 isn't allowed to talk about other companies or what they're working on now by Amazon policy. (Someone needs to buy a ticket on the cluetrain.) They aren't trying to compete with Google. Some observations about search:

Bottom line: search is hard.

He's talking about search inside the book. They scanned all the books themselves. They originally planned to use screensavers to do the OCR, but they found idle disaster recovery machines to do the work. About half the audience here today has tried this (according to a quick show of hands). Edd (on IRC) suggests ego-surfing inside the book.

A9 can remember searches over time, and the new results called out. Can annotate pages with a diary using the A9 toolbar.

A pessimist sees the glass as half empty. An optimist sees the glass as half full. A engineer sees a glass that's twice as large as necessary. If the audio recording works so I can get the exact wording on that, and if it turns out not be by somebody else, that may be a quote of the day. Nope, appears to not be original.

What if everyone becomes an author?

For the first parallel sessions this morning I think I'm going to return to the W3C track for Semantic Web, Phase 2: Developments and Deployment. Hopefully I can snag an electrical outlet since my battery is running down. If not, you may not hear from me till lunch.

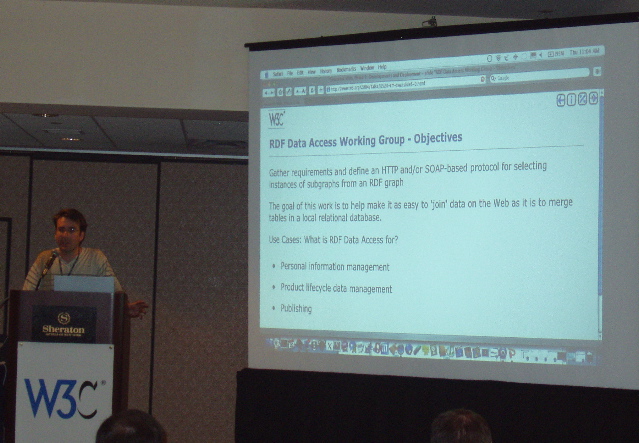

Eric Miller is delivering a Semantic Web Activity Update. (When looking at the notes, just cancel the username/password dialog and you'll get in.) The RDF working group charter will expire and not be renewed at the end of the May. Application work will continue. The RDF Data Access Group led by Dan Connolly is moving quickly and will publish its first public draft soon. The Semantic Web Best Practices and Deployment Working Group provides "guidance, in the form of documents and demonstrators, for developers of Semantic Web applications." Several other individuals are now going to demonstrate examples of deployment beginning with Chuck Meyers.

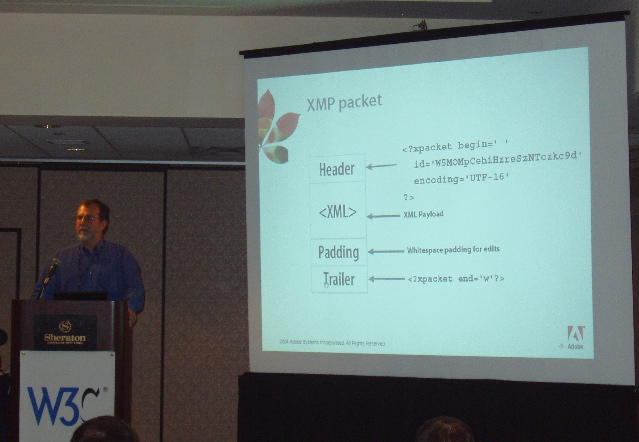

Chuck Meyers from Adobe says the latest Adobe publishing products are all RDF enabled using the Extensible Metadata Platform (XMP). The problem is someone has to enter the metadata to go with the pictures, but they do import Exif data from digital photos. (Now if only my digital camera would stop forgetting the date.) This work goes back six years. The toolkit is open source, and 3rd party ports have expanded platform support. Going open source is unusual for Adobe. XMP uses processing instructions?!? for a lot of content. It's not clear why they don't use plain elements. I couldn't see what RDF brought to this party that you can't get from regular XML.

Frank Careccia from Brandsoft is talking about Commercializing RDF. "RDF is to enterprise software what IP was to networking." Lack of ownership of corporate web sites is a problem. There's no control. He sees this as a problem rather than a strength. He wants to present one unified picture of the company to the outside world, rather than letting the individual people and departments explain themselves. Another person who needs a ticket on the cluetrain.

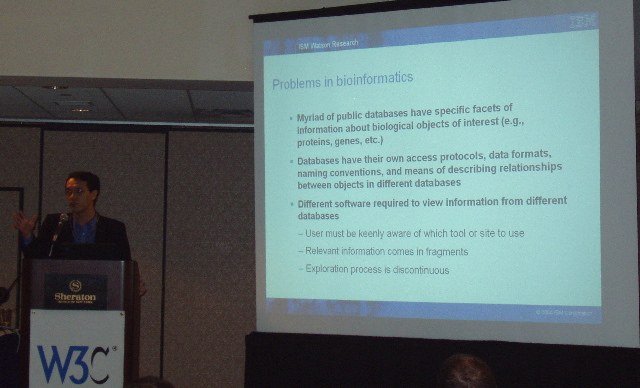

Dennis Kwan from IBM is talking about BioHaystack; a gateway to the Biological Semantic Web. They want to automate the gathering of data from multiple sites. LSID URNs are a common naming convention. The trick identifying the same objects across different databases. They want non-programmers to be able to use BioHaystack to be able to access these heterogenous biological data sources. So far this talk came closer than most SW talks to actually saying something. I felt like I could almost see a real problem being solved here, but still not quite. The talk was not concrete enough to show how RDF and friends actually solved a concrete problem.

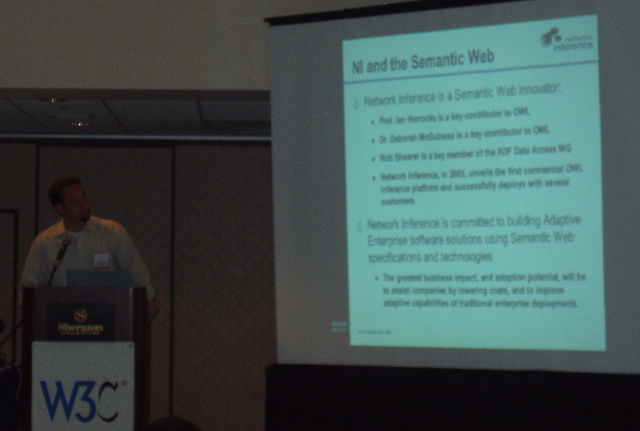

Jeff Pollock? from Network Inference is talking about the business case for the Semantic Web. Customers are not interested in Semantic Web solutions per se. They're looking for painkillers rather than vitamins, but he thinks SW is a painkiller that can reduce cost. RDF and OWL enable standard, machine interpretable semantics. XML enables only syntax. Or at least so he claims. I agree about XML, but so far I've yet to see evidence that there's more semantics in RDF/OWL than in plain XML.

There's a huge number of big words being tossed around this morning (business inferencing, .NET tier, dynamic applications, reclassify corporate data, proprietary metadata markup, "align the semantics of federated distributed sources", "rich, automatic, service orchestration", etc.) which mostly seems to obscure the fact that none of this does anything. OK, finally he talks about a use case of a chart of accounts problem for a Fortune 500 electronics corp. Reporting dollars for cameras vs.phones for cameras phones. OK. This is somewhat concrete, but I'd like to see more details.

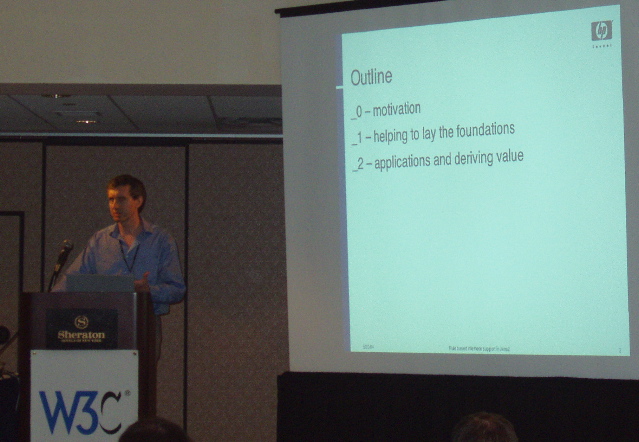

Finally Dave Reynolds from HP is talking about work at HP Labs. Jena is an open source semantic web framework. Joseki is Jena's RDF server. "There's no single killer app." They're investing in and exploring a broad range of applications: Semantic blogging, information portals, and SMILE Joint. He's describing several application areas, but again I don't see how RDF/OWL have anything to do with what he's talking about. I realized part of the problem: no one is showing any code. I feel like I'm a mechanical engineer in 1904 listening to a bunch of other engineers talks about airplanes, but nobody's willing to show me how they actually expect to get their flying machines into the air. Maybe they can do it, but I won't believe it until I see a plane in the air, and even then I really want to take the machine apart before I believe it isn't a disguised hot air balloon. A lot of what I'm hearing this morning sounds like it could float a few balloons.

Question from the audience: have any of you done any testing to make sure your products are interoperable?

For the first block of afternoon parallel sessions I headed down to conference room E, which apparently also has one of those annoying routers that lets you connect but doesn't provide DNS service. Bleah. Consequently you won't get to read these notes in real time, but at least I can spell check them before posting. One of the conference organizers is hunting down the network admins to see if they can fix it, so maybe you'll get to read these a little sooner, but I'm not holding my breath. I finally managed to connect to the wireless network in the next room over. I'm not sure how long this will work. This room's router still isn't working. Hmm, I think I just spotted it. I wonder what would happen if I just walked over and rebooted it?

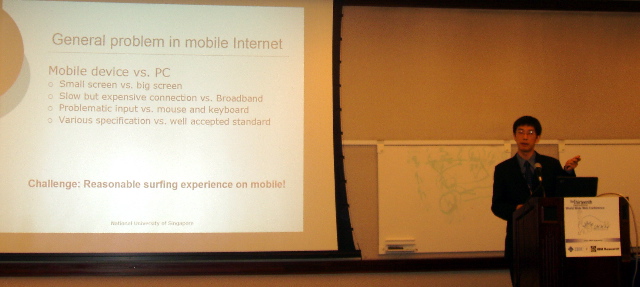

The first afternoon session is Optimizing Encoding, chaired by Junghoo Cho of UCLA. The first of three talks in this session is Xinyi Yin of the National University of Singapore on "Using Link Analysis to Improve layout on Mobile Devices." (Co-author: Wee Sun Lee). The challenge is too provide a reasonable browsing experience on a mobile device with small screen and low bandwidth. They use an idea similar to Google's page rank to select the most important information on the page, and present that most important content only (or first) on the small screen. That sounds very challenging and original. (The presenter does know of some prior art though, which he cites, even though I hadn't heard of it.) However, they weight elements' importance by the probability of the user focusing on an object and by the probability of the user's eye moving from that object to another object. Mostly this seems to be based on size with some additional weight given to the objects in the center, the width/height ratio. (Unimportant info like navigation bars and copyright info tend to be quite narrow), and the connection between words in the object and words in the URL. This is primarily designed for new sites like CNN.

The talk that sold me on the Optimizing Encoding session was IBM's Roberto J. Bayardo "An Evaluation of Binary XML Encoding Optimizations for Fast Stream based XML Processing." (Co-authors: Daniel Gruhl, Vanja Josifvoski, and Jussi Myllymaki) I suspect I'll hate it, but I could be wrong. My prediction for yesterday's schema talk proved to be quite off base. Maybe these guys will surprise me too. My specific prediction is that they'll speed up XML processing by failing to do well-formedness checking. Of course, they'll hide that in a binary encoding and claim the speed gain is from using binary rather than text. However, I've seen a dozen of these binary panaceas and they never really work the way the authors think they work. An XML processor needs to be able to accept any stream of data and behave reliably, without crashing, freezing, or reporting incorrect content. And indeed a real XML parser can do exactly this. No matter what you feed it, it either succeeds or reports an error in a predictable, safe way. Most binary schemes incorrectly assume that because the data is binary they don't need to check it for well-formedness. But as anyone who's ever had Microsoft Word crash because of a corrupted .doc file or had to restore a hosed database from a backup should know, that's not actually true. Binary data is often incorrect, and robust programs need to be prepared for that. XML parsers are robust. Most binary parsers aren't. The fact is when you pull well-formedness checking out of a text-based XML parser it speeds up a lot too, as many developers of incomplete parsers like ???? have found. Binary is not fundamentally faster than text, as long as the binary parser does the same work as the text based parser does. Anyway, that's my prediction for where this talk is going (I haven't read the paper yet.) In an hour, we'll see if my prediction was right.

He thinks XML is too heavyweight for high performance apps. (I completely disagree. I'm really having to bite my tongue here to let the speaker finish in his allotted small time.) both in parsing overhead and space requirements. "Bad side of XML is addressed by throwing away (much of) the good side." XML compression trades speed for small size. Also negatively impacts the ability to stream. Some parsing optimizations slow down the encoding. In other words they trade speed of encoding for speed of decoding. He's only considering single stream encodings. Strategies tested include:

Most of their evaluations are based on IBM's XTalk plus various enhanced versions of XTalk. (He seems to be confusing SAX with unofficial C++ versions. ) TurboXPath is a representative application. Two sample data sets: record-oriented (a big bibliography, 180MB) and more deeply nested collection of real estate listings grouped by cities (50 MB). Used Visual C+++ on Windows. Linux results were similar. They tested expat and Xerces-C. (Why not libxml2?) Their numbers show maybe a third to a half improvement by using binary parsing. expat was three times faster than Xerces-C. (on their first example query). On the second query, improvement is a little more, but in the same ballpark. Skip pointers are a significant improvement here. "Don't throw out plain XML if you haven't tried expat." "Java and DOM based parsers are not competitive."

It took till Q&A to find out for sure, but I was right. They aren't doing full well-formedness checking. For instance, they don't check for legal characters in names. That's why XTalk is faster. They claim expat isn't either (I need to check that) but there was disagreement from the audience on this point. But the bottom line is they failed to demonstrate a difference in speed due to binary vs. text.

The closing talk of this session is Jacqueline Spiesser of the University of Melbourne (co-author Les Kitchen) on "Optimization of HTML Automatically Generated by WYSIWYG programs" such as Microsoft Word, Microsoft Excel, Microsoft FrontPage and Microsoft Publisher. (The talk should really be titled "Optimization of HTML Automatically Generated by Microsoft programs.") This was inspired by an HTML train timetable that too 20 minutes to download over a modem.

Techniques:

Sizes went from 53% of original to 142% of original based on parsing and unparsing alone. Adding the factoring out of style classes brings this from 39% to 89% of the original sizes. Optimal attribute placement improves this again, but I'm not sure I'm reading the numbers in his table correctly. Optimal attribute placement is slow. Total of all four optimizations reduces size to 33% to 72% of the original size. 56% is the "totally bogus average on such a small data set." Their implementation was written in Haskell.

He suggests plugging this in as a proxy server or a stand-alone optimizer. However, to my way of thinking this needs to be fixed in Microsoft Word (and similar tools). Fixing it anywhere else will have a negligible impact.

For the second session block of the afternoon I was torn between XForms and

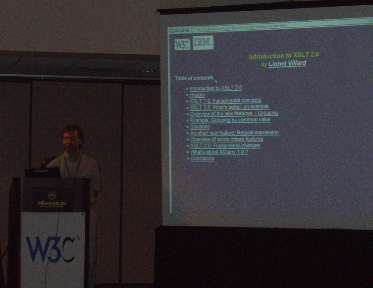

XML: Progress Report and new Initiatives; but I picked the latter to see what Liam Quin had to say about the progress of "Binary Interchange of XML." However, the session begins with Lionel Villard's inoffensive XSLT 2.0 tutorial. There don't seem to be any surprises here.

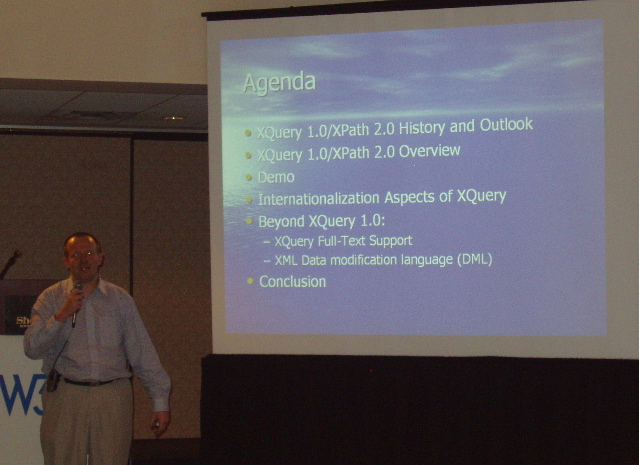

Michael Rhys, SQL Server program manager at Microsoft (which has recently pledged not to support XSLT 2) is now going to talk about XQuery 1.0 and XPath 2. XQuery was the largest working group up to that time. SOAP later surpassed it. "It's huge. There are functions in there I haven't even seen yet." There are over 1200 public comments; 50/50 editorial vs. substantive. "It probably takes another four years to get through with them. I hope not." Summer 2004 XQuery Full-Text language proposal published. Spring 2005 short last call period for XQuery 1.0/XPath 2.0. Plan to finish in 2005.

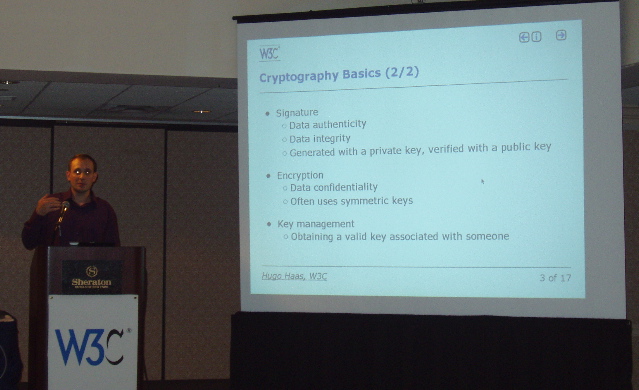

Next up the W3C's Hugo Haas is going to talk about XML Security; i.e. XML Signatures and XML Encryption. Nothing majorly new here; a brief overview of the existing specs.

Finally, the talk I came to hear: Liam Quin is talking about Binary Interchange of XML. "Wake up! This is the controversial stuff!" No wonder: less than a minute in and he's already going off the rails. He explicitly defines an XML document as some in-memory model that needs to be serialized with angle brackets. No! The angle brackets serialization is the XML. The in memory data structure is not. Apparently schema-based schemes for binary formats have been ruled out. That's good to hear. "You might lose comments or processing instructions"?!? That's shocking. These are in the infoset. Furthermore, he really wants to bring in binary data; not just binary encoded XML text but real binary data for video or mapping data. This is a big extension of the XML story. It really adds something to the Infoset that isn't there today. What's being proposed is a lot more than a simple binary encoding for XML. It is a superset of the XML model, not an encoding of it! This is not even Infoset or XML API (SAX, DOM, XOM, etc.) compatible in any meaningful sense. I know you could always encode everything in Base-64 but that would eliminate the speed and size advantages this effort is trying to achieve. (David Booth suggests doing this with unparsed entities? Would that work? I need to think further on this.) This is worse than I thought. However, there may be hope. According to the notes, the working group "has a One-year charter; if they can't make a case by then, the case isn't yet strong enough." The clock started ticking three months ago.