Day 3 of Extreme kicks off with the W3C's Liam Quin (who does win the weirdest hat award for the conference) talking about the status of binary XML, though he's careful not to use the phrase "binary XML" since that's a bit of an oxymoron.

The W3C recently published XML Binary Characterization Use Cases. I haven't had the time to read through all the use cases yet, but what I've skimmed through so far is not compelling. I'll probably read through this and respond when I get back to New York next week.

Last night, Liam told me the W3C has not decided whether or not this effort makes sense. They're worried that if they don't do anything, somebody else may or, worse yet, a hundred different people may do a hundred different things. It's not clear that one format can solve everybody's use cases, or even a majority of the use cases. However, I get the impression that the W3C is convinced that if one format would solve a lot of use cases, they'd like to do it.

This would be a horrible mistake. Of course, there are legitimate needs for binary data; but nothing is gained by polluting XML with binary data. Text readability is an important corner stone of XML. It is a large part of what makes XML so interoperable. XML does what it does so well precisely because it is text. Adding binary data would simply bloat existing tools, while providing no benefit to existing applications. To the extent there's a need for binary data, what's needed are completely new formats optimized for different use cases. There is no call for a single uber-format that tries to be all things to all people, and likely ends up being nothing to no one.

C. Michael Sperberg-McQueen is talking about "Pulling the ladder up behind you", asking whether we're resisting an attempt to resist a hostile takeover of the spec or pulling up the ladder behind us. He doesn't know the answer, but I do. We are resisting a hostile takeover. Other groups are free to build other ladders that fit their needs. He mentions that there was a binary SGML effort at one point. It's not clear how far it got. Anyone know what happened to it?

Liam Quinn: "We're all sisters in the church of XML," and he's challenging the orthodoxy of that church. Once a week someone contacts him and says they need binary XML, but these people often don't know what they mean by "binary XML." Liam says, they're not looking at an incompatible document format; just different ways to transfer the regular XML documents. (I disagree, and I think his statement's more than a little jesuitical. Most of the proposals clearly anticipate changing the infoset and APIs. If the W3C is ruling out such efforts, they should state that clearly.)

Various goals for binary XML:

Cell phones and other micro devices are an important use case. However, as I discussed with Liam last night, there's a question whether these will still be small enough for this to be a problem by the time any binary XML spec were finished. Someone in the audience mentions that at least one cell phone now has a gigabyte of memory (though that serves double duty as both disk and RAM). Moore's law may solve this problem faster than a spec can be agreed on and implemented, though.

The W3C is not interested in hardware-specific, time-sensitive, or schema-required formats. Encryption is not a goal.

Costs of binary XML:

"WAP stands for totally useless protocol."

Benefits:

Me: 1 is disingenuous. Redefining XML as something new does not truly expand the universe of what's done with XML. 2 is done today with text XML. It's not clear binary XML is necessary. There are some size issues here for some documents, but these use cases are very well addressed by gzip. 3 is a very good idea, but has nothing to do with XML. It could be done with a non-XML format.

Liam: The question is not whether we have binary XML-like formats or not. The question is whether we have many or few. Me: It sounds like there's a real control issue here. The W3C gets very nervous with the idea that somebody besides them might define this. Even if they decide it's a bad idea, they'd rather be the one to write the spec than letting someone else do it. They do say a lot of people have come to them asking for this. They did not initiate it.

Side note: In Q&A Liam misunderstands web services. He sees it as an alternative to CORBA/IDL rather than an alternative to REST, and therefore thinks it's a good thing.

This has been a few quick notes typed as I listened. I'll have more to say about this here in a week or so.

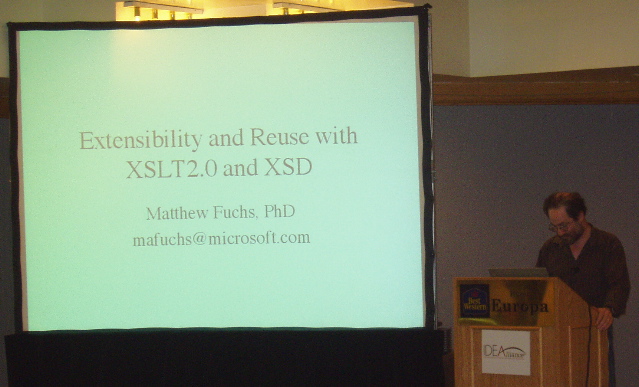

The second, less controversial topic of the morning, is Microsoft's

Matthew Fuchs'

talk on Achieving extensibility and reuse for XSLT 2.0 stylesheets.

He's a self-avowed advocate of object orientation in XML. He's going to talk about how to use the OO features of the W3C XML Schema Language in conjunction with XSLT2 (especially XPath 2.0's element() and attribute() functions to achieve extensibility in the face of unexpected changes.

Stephan Kesper is presenting a simple proof that XSLT and XQuery are Turing complete using μ-recursive functions. There is no concept known that is more powerful for computation than a Turing machine. It is believed but not proved that no such more powerful concept of computation that exists. On the other hand, what exactly a "concept of computation" means is not precisely specified, so this is uncertain.

His technique requires stacks, which he implements using concat(), substring-before(), and substring-after(). Hmm, that seems a bit surprising. I don't think of these functions as a core part of XSLT. I wonder if the proof could be rewritten without using these functions? Kesper thinks so, but the proof would been much more complex.

The XQuery proof is much simpler, because XQuery allows function definition.

He really hates XSLT's XML-based syntax. He much prefers XQuery. He thinks (incorrectly IMO) that XSLT is machine oriented and XQuery is more human oriented.

In Q&A Norm Walsh, thinks the proof depends on a bug in Saxon

(Kesper disagrees). The question is whether it's legal to dynamically choose the template name to be called by xsl:call-template; i.e., is the name attribute of xsl:call-template an attribute value template or not?

The audience can't decide. Hmm, looking at the spec, I think Norm is right, but Dimitre Novatchev may have presented a workaround for this last year so the flaw in the proof may be fixable.

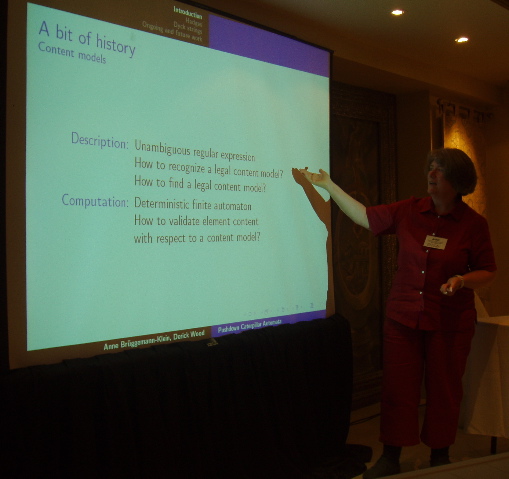

I could follow most of the last paper, barely. This next one, "Balanced context-free grammars, hedge grammars and pushdown caterpillar automata", by Anne Brüggemann-Klein, (co-author Derrik Wood) may run right past my limits so take what I write with a grain of salt. She says the referees made the same comments. According to the abstract,

The XML community generally takes trees and hedges as the model for XML document instances and element content. In contrast, computer scientists like Berstel and Boasson have discussed XML documents in the framework of extended context-free grammar, modeling XML documents as Dyck strings and schemas as balanced grammars. How can these two models be brought closer together? We examine the close relationship between Dyck strings and hedges, observing that trees and hedges are higher level abstractions than are Dyck primes and Dyck strings. We then argue that hedge grammars are effectively identical to balanced grammars, and that balanced languages are identical to regular hedge languages, modulo encoding. From the close relationship between Dyck strings and hedges, we obtain a two-phase architecture for the parsing of balanced languages. This architecture is based on a caterpillar automaton with an additional pushdown stack.

She's actually only going to talk about hedges, and omitting the Dyck strings to make the talk easier to digest. A hedge differs from a forest in that the individual trees are placed in order. In a forest, the trees have no specified order.

In DTDs element names and types are conflated.

I wish the conference put the papers online earlier. They should be up after the conference is over, but for these more technical talks it would be really helpful to be able to read the paper to clarify the points, not to mention I could link to them for people reading remotely who aren't actually here.

This conference has good wireless access. I've also recorded a few talks for later relistening using my laptop's built-in microphone. It makes me wonder if it might be possible to stream the conference out? I suspect the organizers might have something to say about that, but it would be interesting to webcast an entire conference in realtime, not just an occasional keynote.

The afternoon sessions split between the data-heads and the doc-heads. Since I have feet planted firmly in each camp, I had some tough choices to make but I decided to start with the docheads and TEI. Lou Burnard is talking about "Relaxing with Son of ODD, or What the TEI did Next" (co-author Sebastian Rahtz). According to the abstract,

The Text Encoding Initiative is using literate schema design, as instantiated in the completely redesigned ODD system, for production of the next edition of the TEI Guidelines. Key new aspects of the system include support of multiple schema languages; facilities for interoperability with other ontologies and vocabularies; and facilities for user customization and modularization (including a new web-based tool for schema generation). We'll try to explain the rationale behind the ongoing revision of the TEI Guidelines, how the new tools developed to go with it are taking shape, and describe the mechanics by which the new ODD system delivers its promised goals of customizability and extensibility, while still being a good citizen of a highly inter-operable digital world.

TEI v5 is a major update. They're using Perforce to manage the content management system, and are experimenting with switching to eXist. They chose RELAX NG for the schema language. "DTDs are not XML, and need specialist software." W3C XML Schema Language is much too complex. They use Trang to convert the RELAX NG schemas.

At this point, lunch began to disagree with me so I had to make a quick exit back to my room and missed the rest of the talk. :-( I really shouldn't have had that second dessert. But in the elevator on the way up, I did consider how many projects are switching to RELAX NG. TEI is just the latest victory. Others include DocBook, XHTML 2, and SVG.

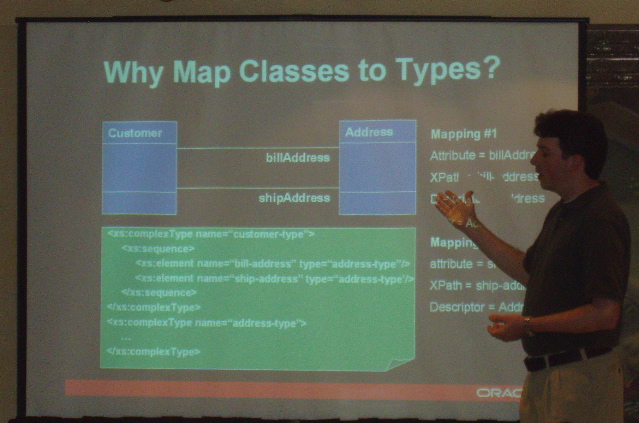

Blaise Doughan of Oracle (co-author Donald Smith) is talking about Mapping Java Objects to XML and Relational Databases. Michael Sperberg-McQueen objects to classifying Oracle as a relational database in his introduction to the talk.

So far this looks like another W3C XML Schema Language based Java-XML data binding system. The difference from JAXB is that they use XPath to define the mappings rather than element names. A question from the audience points out that they've made the classic database-centric mistake of ignoring arity problems (i.e. what happens when an element contains multiple child elements of the same type/name). They do support position based mapping. The demo crashed.

The final session of the day is Norm Walsh talking about DocBook, particularly the redesign of DocBook in version 5.0 using RELAX NG. This is called DocBook NG. There's currently an experimental "Eaux de Vie" release. "DocBook is my hammer." DocBook has about 400 elements, about half of which have mixed content and almost none have simple content. It is a DTD for prose.

It was originally designed as an exchange format, but today it's pretty much just an authoring format. DocBook has grown by accretion. Thus, it's time to start over. Plus the DTD fails to capture all the constraints. They'll use Relax NG. Trang can convert to W3C XML Schemas, but Trang isn't smart enough to produce the DTD.

RELAX NG patterns allow DocBook NG to combine elements (e.g. one info element instead of book.info, chapter.info, article.info, etc.) while still allowing different content models in different places.

DocBook NG has only 356 elements.

He also like's RELAX NG's & connector for unordered content. Co-constraints are useful too. This all allows him to untangle the conflicting CALS and HTML table models. (Wouldn't namespaces be a better fix here?)

A few elements such as pubdate benefit from RELAX NG data types (but this is arguable).

For what RELAX NG can't specify, they'll use a rule-based technology such as Schematron. This could implement an exclusion.

Traditionally the version of DocBook has been identified by the public ID. This no longer applies, so a version attribute is needed.

This is also useful for referential integrity constraints, such as a footnoteref points to a footnote. They can embed Schematron in a RELAX NG schema.

Ease of use, including ease of subsetting and extending, is crucial. Migration issues are also important. XSLT helps. His XSLT stylesheet to convert covers about 94% of the test cases.

He doesn't think the W3C XML Schema language is the right abstraction for schemas that have lots of mixed content such as DocBook. John Cowan: "XML DTDs are a weak and non-conforming syntax for RELAX NG".