Extreme Markup Languages 2004 is set to get underway in about 45 minutes. It appears the wireless access does work in the conference rooms where I'm typing this so expect live reports throughout the day. As usual when I'm updating in close to real time, please excuse any spelling errors, typos, misstatements, etc. I also reserve the right to go back and rewrite, correct, and edit my remarks if I misquote, misunderstand, or misparaphrase somebody.

I'm pleased to see the conference has put out lots of power strips for laptops. And in a stroke of genius, they're all in the first three rows so people have to come to the front of the room to get power rather than hiding in the back. :-)

Over 2000 messages have piled up in my INBOX since Saturday. 29 of them weren't spam. It didn't help that IBiblio recently decided to start accepting messages from servers that don't follow the SMTP specs correctly. Since I'm on my laptop, I'm logging into the server and scanning the headers manually with pine rather than using Eudora and my normal spam filters. This probably makes me more likely to accidentally delete important email, especially since pine occasionally confuses my terminal emulator when faced with the non-ASCII subject lines of a lot of spam, so I don't always see the right headers. If you're expecting a response from me and don't get one, try resending the message next week.

I'm typing this on my PowerBook G4 running Mac OS X. This means a lot of the Mac OS 9 AppleScripts I use to do things like change the quote of the day and increment the day's news don't work. I expect to be upgrading my main work system to Mac OS X in the next month or two (depending on when Apple finally ships the G5 I ordered a month and a half ago), so I really need to update all these scripts to work with Mac OS X. Right now this is all done with regular expressions and baling wire. The only excuse I can offer is that these are legacy scripts originally developed for Cafe au Lait in the pre-XML years. However, the regular expressions package doesn't work in Mac OS X, and as long as I'm rewriting this anyway I might as well base it on XML. Does anyone know of a good XML parser for Mac OS X that's AppleScriptable? So far the only one I've found only runs in the Classic environment. I could probably teach myself enough about Mac programming in Objective-C to write an AppleScript wrapper around Xerces, but surely someone's done this already?

B. Tommie Usdin is giving the keynote address, "Don't pull up the ladder behind you." From the abstract it sounds like she may be targeting me (among others):

The papers submitted for Extreme Markup Languages in 2004 were remarkable in a number of ways. There were more of them than we have ever received before, and their technical quality was, on the whole, better than we have received before. More were based on real-life experience and more used formalisms. Also new this year, however, was a tendency to identify, poorly characterize, and then attack a specification, technology, or approach seen as competition to the author’s. I saw evidence of the implicit assumption that there is limited space for XML applications and specifications and that success of one limits the opportunity for success for another. It is this attitude that leads to “specification poaching” and a variety of other anti-social behaviors that can in fact hurt us all. I believe that XML-space is in fact not limited any more than, for example, UNICODE-space is limited. We can and should learn from each other’s requirements, applications, and implementations. Pulling up that ladder after yourself in this environment hurts not only the people who want to come after you, it hurts you by limiting your access to new ideas, techniques, and opportunities.

I definitely do target some other technologies in my presentation on XOM. However, it's not because I think there's only room for one spec in a space. It's because I think some of the competitors are actively harmful in a number of ways. 20 competing APIs may be a good thing, but only if all 20 of them are good when considered in isolation. One of the nice things about having a plethora of APIs is that we can differentiate the good from the bad and throw away the bad ones. When there's only one API we're stuck with it, and we may not even realize how bad it is (*cough* DOM *cough*). A mix of 15 bad APIs and 5 good ones just confuses everyone and costs productivity. It's better to pick and choose only the technologies that actually work. But I'll get my own 45 minutes to argue this point tomorrow. :-)

Usdin is talking now. She says there were some problems with MathML and internal DTD subsets in the paper submissions, plus one person who insisted that XML was only for bank transactions and it was ridiculous to write conference papers in anything other than Word. MathML may be ready for prime time, but it is not yet ready for amateur use. She believes in shared document models and doesn't like it when people make up their own markup, even when the submitted document is in fact valid. In other words, she doesn't like internal DTD subsets, because they really break the whole notion of validity: you can't easily check a document against the shared external DTD subset without using its internal DTD subset too. Me: Interesting. I've known for a long time that the internal DTD subset was a major source of problems for parser implementers, but it seems to be a problem for users, even experienced users, too. More evidence that XML made the wrong cut on how parsers behave. Parsers should be able to ignore the internal DTD subset, but the spec doesn't allow this.
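A small Python illustration of why a parser can't just skip the internal DTD subset (my own example, not from the talk): an entity declared there changes the document's content, so the same body parses differently, or not at all, once the subset is removed. The sample documents are invented for the demonstration.

```python
import xml.etree.ElementTree as ET

# The internal DTD subset declares an entity that the body then uses.
WITH_SUBSET = """<!DOCTYPE doc [
  <!ENTITY who "world">
]>
<doc>Hello, &who;</doc>"""

# The same body with the internal subset stripped out.
WITHOUT_SUBSET = "<doc>Hello, &who;</doc>"


def parse_text(xml_source):
    """Return the root element's text, or None if the document isn't well-formed."""
    try:
        return ET.fromstring(xml_source).text
    except ET.ParseError:
        return None


print(parse_text(WITH_SUBSET))     # the entity is expanded from the internal subset
print(parse_text(WITHOUT_SUBSET))  # &who; is now undeclared, a well-formedness error
```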

Now she's talking about pulling up the ladder behind us. She seems mostly focused on different XML applications (vocabularies) rather than libraries and APIs.

She compares this to Unicode. Unicode doesn't try to stop other people from using Unicode. I note that Unicode does actively try to stop competing character encodings, though. Overall, it sounds like I might have misguessed where this talk is going. Her objection is to overspecifying the applications of XML, not the core technologies on which these applications build.

James David Mason (co-author Thomas M. Insalaco) of the Y-12 National Security Complex is talking about "Navigating the production maze: The Topic-Mapped enterprise." According to the abstract, "A manufacturing enterprise is an intricate web of links among products, their components, their materials, and the facilities and trained staff needed to turn materials into components and completed products. The Y-12 National Security Complex, owned by the U.S. Department of Energy, has a rather specialized product line, but its problems are typical of large-scale manufacturing in many high-tech industries. A self-contained microcosm of manufacturing, Y-12 has foundries, rolling mills, chemical processing facilities, machine shops, and assembly lines. Faced with the need to maintain products that may have been built decades ago, Y-12 needs to develop information-management capabilities that can find out rapidly for example, what tools are needed to make parts for a particular product or, if tools are replaced, which products will be affected. A topic map, still in preliminary development, reflects a portion of Y-12's web of relationships by treating the products in detail, the component flows, and the facilities and tools available. This topic map has already taught us new things about Y-12. We hope to extend the topic map by including other operational aspects, such as the skills and staffing levels necessary to operate its various processes."

Legacy issues are a big deal for them. They need to be able to resume production on very old equipment with a 36-month lead time. They need to be able to rebuild anything, even though original suppliers like DuPont may not make the components anymore. "Fogbank is a real material, but I can't tell you what it is." "They'd shoot me if I told you what Fogbank is." (I suspect it's made of highly enriched uranium, though. It also appears to be producing lots of tetrachloroethylene and K-40. Ain't Google wonderful?) Topic maps integrate the data. They help people understand the data and help find errors in the existing data. Naming issues (e.g. oralloy vs. enriched uranium) are a big deal. They need to be able to unite topics that go by different names. Access to the data is an as-yet unsolved issue and needs to be added to the ontology. They want to show different associations depending on credentials.
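Uniting topics that go by different names can be sketched as a merge: any two topics sharing a name become one topic carrying all the names of both. This is a simplified Python sketch, not Y-12's implementation; it ignores scope and subject identifiers, which real topic-map merging also takes into account, and the topic ids and the "Fogbank" entry are made up for the example.

```python
from collections import defaultdict


def merge_by_name(topics):
    """Merge topics that share any name.

    `topics` maps a topic id to a set of names. Returns a list of merged
    name sets, one per resulting topic, using a small union-find.
    """
    parent = {tid: tid for tid in topics}

    def find(t):
        while parent[t] != t:
            parent[t] = parent[parent[t]]  # path halving keeps chains short
            t = parent[t]
        return t

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Link every topic to the first topic seen carrying each of its names.
    first_with_name = {}
    for tid, names in topics.items():
        for name in names:
            if name in first_with_name:
                union(first_with_name[name], tid)
            else:
                first_with_name[name] = tid

    merged = defaultdict(set)
    for tid, names in topics.items():
        merged[find(tid)] |= names
    return list(merged.values())


# Hypothetical topics: t2 bridges the two names for the same material.
TOPICS = {
    "t1": {"oralloy"},
    "t2": {"oralloy", "enriched uranium"},
    "t3": {"enriched uranium"},
    "t4": {"Fogbank"},
}
print(merge_by_name(TOPICS))
```

Union-find is used so that chains of shared names (t1 to t2 to t3) collapse into one topic regardless of the order in which the topics are read.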

He's used XSLT on HTML tables generated by Excel to create topic maps (among other things).
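The table-to-topic-map step he did with XSLT can be approximated in Python with ElementTree; this swaps XSLT for Python and is only a sketch. It assumes the table has already been tidied into well-formed, namespace-free XHTML (raw Excel HTML usually isn't), emits XTM 1.0-style `topic`/`baseName`/`baseNameString` elements, and uses a hypothetical `t-` id convention and invented sample rows.

```python
import re
import xml.etree.ElementTree as ET

XTM_NS = "http://www.topicmaps.org/xtm/1.0/"


def table_to_topics(xhtml_table):
    """Turn the first cell of each data row into an XTM-style topic.

    Rows without <td> cells (e.g. a <th> header row) are skipped.
    """
    table = ET.fromstring(xhtml_table)
    topic_map = ET.Element(f"{{{XTM_NS}}}topicMap")
    for row in table.findall(".//tr"):
        cells = [(c.text or "").strip() for c in row.findall("td")]
        if not cells or not cells[0]:
            continue
        name = cells[0]
        # Hypothetical id convention: "t-" plus a slug of the name.
        topic_id = "t-" + re.sub(r"[^A-Za-z0-9]+", "-", name).strip("-").lower()
        topic = ET.SubElement(topic_map, f"{{{XTM_NS}}}topic", id=topic_id)
        base_name = ET.SubElement(topic, f"{{{XTM_NS}}}baseName")
        ET.SubElement(base_name, f"{{{XTM_NS}}}baseNameString").text = name
    return topic_map


# Invented sample data standing in for an Excel export.
TABLE = """<table>
  <tr><th>Part</th><th>Tool</th></tr>
  <tr><td>Bracket A</td><td>Lathe 3</td></tr>
  <tr><td>Housing B</td><td>Mill 7</td></tr>
</table>"""

print(ET.tostring(table_to_topics(TABLE), encoding="unicode"))
```

A fuller version would also turn the other columns into associations (part made with tool), which is where the product/tool questions from the abstract come from.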

Next up are Duane Degler and Renee Lewis talking about "Maintaining ontology implementations: The art of listening." They do government work too. They're giving an example of shopping for a car. They seem to be interpreting the inability to find what they want as a metadata problem. I suspect it's more of a user interaction problem. Specifically, web sites tend to be designed according to the mental model of the publisher rather than the mental model of the reader. This is why you can search for cars by model number but not for "four-seat convertible." Auto dealers think about model numbers. Consumers think about features. But the speakers don't see it that way. They want to listen to the users' questions to evolve the language and refine the topic maps. Specifically, they want to monitor search requests, Q&A requests, threaded discussions, etc. Lewis says, "Topic map versioning we put on the back burner because it looked big and ugly." It sounds like a good idea overall, but it's a little light on the practical specifics. User buy-in and management approval are a big problem.
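One crude way to "listen" to search logs, sketched in Python: count the words in users' queries that match no name already in the ontology, as a hint about where users' vocabulary and the topic map diverge. This is not the speakers' system; the function name, the tokenizing rule, and the car queries and model names are all invented for illustration.

```python
import re
from collections import Counter

WORD = re.compile(r"[a-z0-9]+")


def vocabulary_gaps(queries, topic_names, top_n=10):
    """Return the most common query words that appear in no topic name.

    `queries` is an iterable of raw search strings; `topic_names` is an
    iterable of names already present in the topic map.
    """
    known = set()
    for name in topic_names:
        known.update(WORD.findall(name.lower()))
    misses = Counter()
    for query in queries:
        for word in WORD.findall(query.lower()):
            if word not in known:
                misses[word] += 1
    return misses.most_common(top_n)


# Hypothetical log: shoppers ask about features, the site knows model numbers.
QUERIES = ["four-seat convertible", "convertible under 30000", "Model X200 price"]
TOPIC_NAMES = ["Model X200", "Model Z400", "price"]
print(vocabulary_gaps(QUERIES, TOPIC_NAMES))
```

In practice you'd want stop-word filtering ("under") and a human reviewing the list before anything goes into the ontology.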

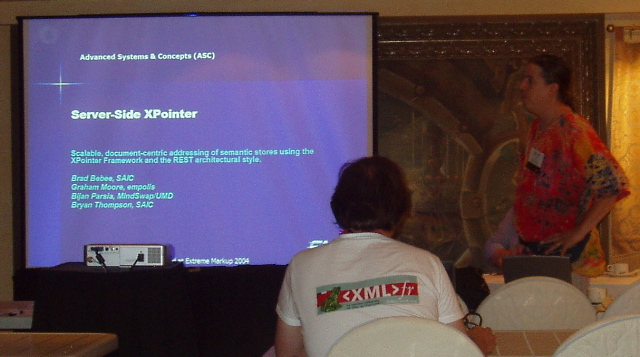

The afternoon sessions split into two tracks. I'm skipping topic maps for Bryan Thompson talking about "Server-Side XPointer" (co-authors: Graham Moore, Bijan Parsia, and Bradley R. Bebee). He wants to relate core REST principles to linking and document interchange. RESTful interfaces should be opaque: you shouldn't be able to see what's behind the URI. Semantics should be generic, based on the request method and response codes.

POST to create a resource and PUT to update one?!? That sounds backwards. XPointer is not just XPath. It is extensible to new schemes. Clients don't need to understand these schemes! It's enough that the server understands them! Now that he's said it, it's obvious. I don't know why I didn't see this before. It makes XPointers much more useful. Use the range-unit field in the HTTP Range header to say "xpointer". The server responds with 206 Partial Content. Hmm, this does require some client support. He doesn't like the XML data model and XPath for addressing. These aren't extensible enough. We need to use more application-specific addressing schemes and content negotiation.
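A minimal server-side sketch of the idea, in Python: the client sends `Range: xpointer=element(/1/2)` and the server, not the client, resolves the pointer and answers 206 with just that fragment. The exact header syntax, the `Content-Range` value, and the `handle_get` function are my assumptions rather than the paper's design, and only the bare child-sequence form of the XPointer `element()` scheme is handled.

```python
import copy
import xml.etree.ElementTree as ET


def resolve_element_pointer(root, pointer):
    """Resolve an element() child sequence such as "element(/1/2)".

    /1 is the document element; each later step picks the n-th child
    element (1-based). Returns None if the pointer doesn't resolve.
    """
    prefix = "element(/"
    if not (pointer.startswith(prefix) and pointer.endswith(")")):
        return None
    try:
        steps = [int(s) for s in pointer[len(prefix):-1].split("/")]
    except ValueError:
        return None
    if steps[0] != 1:
        return None
    node = root
    for n in steps[1:]:
        children = list(node)
        if not 1 <= n <= len(children):
            return None
        node = children[n - 1]
    return node


def handle_get(document, range_header=None):
    """Hypothetical GET handler returning (status, headers, body)."""
    if range_header is None:
        return 200, {}, document
    unit, _, spec = range_header.partition("=")
    if unit.strip() != "xpointer":
        # This sketch only understands the xpointer unit; HTTP says to
        # ignore a Range header whose unit the server doesn't understand.
        return 200, {}, document
    spec = spec.strip()
    target = resolve_element_pointer(ET.fromstring(document), spec)
    if target is None:
        return 416, {}, ""  # Range Not Satisfiable
    fragment = copy.copy(target)
    fragment.tail = None  # don't serialize text that follows the element
    return 206, {"Content-Range": "xpointer " + spec}, ET.tostring(fragment, encoding="unicode")


DOC = "<catalog><item>A</item><item>B</item></catalog>"
print(handle_get(DOC, "xpointer=element(/1/2)"))
```

Supporting a new addressing scheme only means teaching `resolve_element_pointer` (or a sibling) another scheme on the server; the client just passes the string through.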

The next talk has Sebastian Schaffert giving a "Practical Introduction to Xcerpt" (co-author François Bry). He says current query languages intertwine querying with document construction, which makes them unsuitable for reasoning with metadata à la the Semantic Web. He uses an alternate syntax called "data terms" that is less verbose than XML. It is not strictly isomorphic to XML because they want to research alternate possibilities.
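To get a feel for why data terms are less verbose, here's a Python sketch that renders XML in a data-term-like notation: `label [ children ]`, with text as quoted strings. This approximates the look of Xcerpt data terms, not their full grammar; in particular it ignores attributes, namespaces, mixed-content tails, and Xcerpt's distinction between ordered and unordered children, and the sample document is invented.

```python
import xml.etree.ElementTree as ET


def to_data_term(elem):
    """Render an element as label [ child, child, ... ].

    Square brackets here mean ordered children; text content becomes a
    quoted string. Attributes and tail text are ignored in this sketch.
    """
    parts = []
    if elem.text and elem.text.strip():
        parts.append('"' + elem.text.strip().replace('"', '\\"') + '"')
    parts.extend(to_data_term(child) for child in elem)
    return f"{elem.tag} [ {', '.join(parts)} ]" if parts else f"{elem.tag} [ ]"


SAMPLE = "<book><title>Extreme</title><year>2004</year></book>"
print(to_data_term(ET.fromstring(SAMPLE)))
```

Dropping end tags is where most of the savings come from; the structure stays the same tree.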

Jeremy J. Carroll (co-author Patrick Stickler) is talking about RDF Triples in XML (TriX). RDF can be serialized in XML but is not XML, and is not about markup. The graph should be obvious in the syntax, which is closer to N-Triples than to RDF/XML.

It uses uri, plainLiteral, id, and typedLiteral elements, all of which contain plain text. A triple element contains a subject, predicate, and object (order matters). The predicate must be a uri, but the subject can be a literal. A graph contains triples and can be named with a uri. A TriX document can contain multiple graphs. Interesting idea: an XSLT transform can be applied before RDF validation to transform the document into the form to be validated. That's it. This seems much simpler than RDF/XML.

In the final regular session of the day, Eric Miller (co-author C. M. Sperberg-McQueen) is talking about "On mapping from colloquial XML to RDF using XSLT". This is about schema annotation. Two kinds of data integration:

He's talking about the second type. Apple's plist format is really nasty, but it works for Apple. You can use XSLT to transform it into what we want, but this information is too valuable to be sitting in makefiles, so put it in the schemas.
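Part of what makes plists awkward is that a `<key>` element is paired with whatever value element happens to follow it, so the relationship is positional rather than structural. A Python sketch of the mapping idea, using the standard `plistlib` in place of XSLT: the `KEY_TO_PREDICATE` table stands in for the mapping information that, in the talk's approach, would live as schema annotations. The predicate URIs, the subject URI, and the sample plist are hypothetical.

```python
import plistlib

# Hypothetical mapping table; in the talk's approach this would be
# recorded as annotations in the schema rather than in build scripts.
KEY_TO_PREDICATE = {
    "CFBundleName": "http://purl.org/dc/elements/1.1/title",
    "CFBundleVersion": "http://example.org/vocab#version",
}


def plist_to_triples(plist_bytes, subject):
    """Map top-level plist keys to (subject, predicate, object) triples."""
    data = plistlib.loads(plist_bytes)
    triples = []
    for key, value in data.items():
        predicate = KEY_TO_PREDICATE.get(key)
        if predicate is None:
            continue  # unmapped keys are skipped rather than guessed at
        triples.append((subject, predicate, str(value)))
    return triples


PLIST = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
  <key>CFBundleName</key>
  <string>Example</string>
  <key>CFBundleVersion</key>
  <string>1.0</string>
  <key>LSMinimumSystemVersion</key>
  <string>10.3</string>
</dict>
</plist>"""

for triple in plist_to_triples(PLIST, "http://example.org/app"):
    print(triple)
```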