Memo to self: I really need to update the scripts used to edit this site so they use a real XML parser instead of regular expressions, which don't even work on my PowerBook anyway. The only excuse I have for this bogosity is that these scripts predate XML by a year or two.

Second memo to self: I have to figure out whether it's BBEdit on Mac OS X, rsync, or something else that keeps corrupting all my UTF-8 files when I move them from the Linux box to the PowerBook.

Day 2 of XML Europe. More stream of consciousness notes from the show, though probably fewer today since I also have to prepare for and deliver my own talk on SAX Conformance Testing. I'll put the notes, paper, and software for that up here next week when I return to the U.S. and have the time to discuss it on various mailing lists.

Memo to conference organizers: open wireless access at the conference is a must in 2004. If the venue won't allow this, find another venue!

Memo to conference attendees: ask the conference if they provide open wireless access. If the conference doesn't, find another conference!

Having wireless access radically changes the experience at the conference. It enables many things (besides net surfing in the boring talks). Live note taking and Rendezvous enable the audience to communicate with each other and comment on the talks in real time without disturbing others. When you're curious about a speaker's point, it's easy to Google it. Providing wireless access makes the sessions much more interactive.

The morning began with a session entitled "Topic Maps Are Emerging. Why Should I Care?" Unfortunately the question in the title wasn't really answered in the session. I've been hearing about topic maps for years, and have yet to see what they (or RDF, or OWL, or other similar technologies) actually accomplish. What application is easier to write with topic maps than without? What problem does this stuff actually solve? All I really want to hear is one or two clear, specific examples and use cases. So far I haven't seen one.

Next Alexander Peshkov is talking about a RELAX NG schema for XSL FO.

After some technical glitches, Uche Ogbuji is talking about XML good practices and antipatterns in a talk entitled "XML Design Principles for Form and Function". Subjects include (I love these names)

java element

He doesn't like "hump case" (camel case).

Using attributes to qualify other attributes is a big No-No. If you're doing this, you're swimming upstream. You should switch to elements.

Envelope elements (company contains employees contains employee; library contains books contains book) make processing easier, but not always. Use them only if they really represent something in the problem domain, not just to make life easier for the processing tools.

Don't overuse namespaces. He (unlike Tim Bray) likes URNs for namespaces, mostly to avoid accidental dereferencing. He also suggests RDDL. He suggests "namespace normal form": Declare all namespaces at the top of the document. Do not declare two different prefixes for the same namespace.
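
The two "namespace normal form" rules are mechanical enough to check in code. Here is a minimal sketch in Python, using only the standard library. The function name `namespace_normal_form_problems` and the `urn:x-example:` URIs are made up for illustration and are not from the talk. It relies on `iterparse` reporting each `start-ns` event just before the `start` event of the element that carries the declaration.

```python
import io
import xml.etree.ElementTree as ET


def namespace_normal_form_problems(xml_text):
    """Return a list of violations of the two rules noted above.

    Hypothetical helper for illustration only.
    """
    problems = []
    prefixes_by_uri = {}      # namespace URI -> set of prefixes bound to it
    elements_started = 0      # how many start tags have been seen so far

    source = io.BytesIO(xml_text.encode("utf-8"))
    for event, payload in ET.iterparse(source, events=("start", "start-ns")):
        if event == "start":
            elements_started += 1
            continue
        prefix, uri = payload
        # A declaration seen after the root's start tag sits on a descendant.
        if elements_started > 0:
            problems.append(f"namespace {uri!r} is declared below the root element")
        prefixes_by_uri.setdefault(uri, set()).add(prefix)

    for uri, prefixes in prefixes_by_uri.items():
        if len(prefixes) > 1:
            problems.append(f"namespace {uri!r} has several prefixes: {sorted(prefixes)}")
    return problems


good = '<a:doc xmlns:a="urn:x-example:a" xmlns:b="urn:x-example:b"><b:item/></a:doc>'
bad = '<doc xmlns:a="urn:x-example:a"><a:item xmlns:b="urn:x-example:a"/></doc>'
print(namespace_normal_form_problems(good))
print(namespace_normal_form_problems(bad))
```

The second document breaks both rules: it declares a namespace on a child element, and it binds two prefixes to the same URI.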

A very good talk. I look forward to reading the paper. FYI, he's a wonderful speaker; probably the best I've heard here yet. (Steven Pemberton and Chris Lilley were pretty good too.) Someone remind me to invite them to SD next year.

Componentize XML. Avoid large (gigabyte) documents.

Be wary of reflex use of data typing. Pre-packaged data types often don't fit your problem.

"Enforce well-formedness checks at every application boundary."

Forget "Binary XML." Use gzip. "The idea of binary XML flies in the face of all the concepts that make XML work."
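
The last two points combine naturally: ship plain XML through gzip, and refuse anything that isn't well-formed as it crosses into the application. A minimal Python sketch, assuming both sides agree that the payload is gzip-compressed UTF-8 XML. The names `send` and `receive` are hypothetical, not from the talk.

```python
import gzip
import xml.etree.ElementTree as ET


def send(xml_text: str) -> bytes:
    """Compress ordinary textual XML instead of inventing a binary format."""
    return gzip.compress(xml_text.encode("utf-8"))


def receive(payload: bytes) -> ET.Element:
    """Decompress, then check well-formedness before anything else sees the data."""
    xml_bytes = gzip.decompress(payload)
    try:
        return ET.fromstring(xml_bytes)
    except ET.ParseError as err:
        raise ValueError(f"rejected malformed XML at the boundary: {err}") from err


# A repetitive document, which is typical of data-oriented XML.
doc = "<orders>" + "".join(
    f'<order id="{i}"><status>shipped</status></order>' for i in range(1000)
) + "</orders>"

packed = send(doc)
print(len(doc.encode("utf-8")), "bytes as text,", len(packed), "bytes gzipped")
print(receive(packed).tag)
```

The receiver still gets ordinary XML that any parser or text editor can read, and a malformed document stops at `receive` rather than deep inside the application.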

The acetaminophen (paracetamol) acid test for markup vocabularies: Show a sample document to a typical XML-aware but non-expert user. Does it give them a headache?

Next up is Brandon Jockman of Innodata Isogen on "Test-Driven XML Development". Hmm, the A/V equipment in this room seems to be giving everyone fits today. It worked well yesterday. This does not bode well for my presentation this afternoon.

One thing I'm noting in this and several of the other talks is that in a mere 45-minute session the traditional tripartite outline structure (tell your audience what you're going to tell them, tell them, and then tell them what you told them) doesn't really work. There's not enough time to do it, nor is the talk long enough that it's necessary. At most, summarize the talk in one sentence, not even an entire slide. In fact the title of the talk (if it isn't too cute) is often a sufficient summary.

"XSLT gives you a really big hammer to hit yourself with."

He suggests using Eric van der Vlist's XSLTunit for writing XSLT that tests XSLT.

Also recommends XMLUnit for the .NET folks.
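
The general idea behind this kind of tool is to compare XML output by structure rather than by raw string, so that attribute order and pretty-printing don't cause spurious test failures. Here is a rough Python sketch of that idea. It is not the API of XSLTunit or XMLUnit, and their comparison rules differ. It uses `xml.etree.ElementTree.canonicalize` from the standard library (Python 3.8 or later). The helper `xml_equal` and the sample documents are invented for illustration.

```python
import unittest
import xml.etree.ElementTree as ET


def xml_equal(expected: str, actual: str) -> bool:
    """Compare two documents after canonicalization.

    Canonical XML sorts attributes and expands empty-element tags;
    strip_text=True also drops leading and trailing whitespace in text.
    """
    return (ET.canonicalize(expected, strip_text=True)
            == ET.canonicalize(actual, strip_text=True))


class TransformOutputTest(unittest.TestCase):
    def test_same_structure_different_serialization(self):
        expected = '<a x="1" y="2"><b/></a>'
        actual = '<a y="2" x="1">\n  <b></b>\n</a>'   # e.g. a stylesheet's output
        self.assertTrue(xml_equal(expected, actual))

    def test_real_difference_is_detected(self):
        self.assertFalse(xml_equal('<a x="1"/>', '<a x="2"/>'))


if __name__ == "__main__":
    unittest.main()
```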

I should look at these to see if there are any good ideas here I can borrow for XOM's XOMTestCase class.

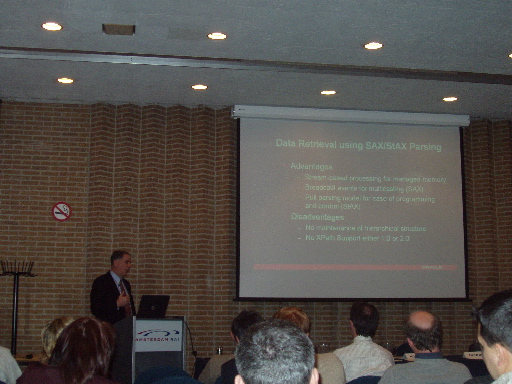

Mark Scardina, "owner" of Oracle's XML Developer Kit, is talking about "High Performance XML Data Retrieval."

XPath is the preferred query language, apparently because of its broad support in different standards like DOM, XSLT, and XQuery.

The DOM Working Group is finished and will not be rechartered. DOM Level 3 XPath is limited to XPath 1.0. Multiple XPath queries require multiple tree traversals (at least in a naive, non-caching implementation -ERH).

High performance requirements include managed memory resources, even for very large (gigabyte) documents. This requires streaming, but SAX/StAX aren't good fits. Also need to handle multiple XPaths (i.e. XPath location paths) with minimum node traversals. Knowing the XPath in advance helps. Will not handle situation where everything is dynamic. This must support both DTDs and schemas (and documents with neither).
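
To make the "know the paths in advance, traverse once" requirement concrete, here is a toy Python sketch built on the standard library's SAX parser. It is not Oracle's design or API. The class name `PathDispatcher` and the helper `extract` are made up, and it handles only simple absolute location paths made of child steps (no predicates, no `//`, no wildcards). A stack of element names tracks the current path while the document streams by, and every registered path is answered in the same pass.

```python
import xml.sax


class PathDispatcher(xml.sax.ContentHandler):
    """Dispatch element text to callbacks registered for absolute paths."""

    def __init__(self):
        super().__init__()
        self._handlers = {}   # "/a/b/c" -> callback(path, text)
        self._stack = []      # element names from the root down to the current element
        self._text = []       # one text buffer per open element

    def register(self, path, callback):
        self._handlers[path] = callback

    def startElement(self, name, attrs):
        self._stack.append(name)
        self._text.append([])

    def characters(self, content):
        if self._text:
            self._text[-1].append(content)

    def endElement(self, name):
        path = "/" + "/".join(self._stack)
        text = "".join(self._text.pop())   # direct text children only
        self._stack.pop()
        callback = self._handlers.get(path)
        if callback is not None:
            callback(path, text)


def extract(xml_bytes, paths):
    """Collect (path, text) pairs for all registered paths in one parse."""
    found = []
    dispatcher = PathDispatcher()
    for path in paths:
        dispatcher.register(path, lambda p, t: found.append((p, t)))
    xml.sax.parseString(xml_bytes, dispatcher)
    return found


doc = (b"<library><book><title>A</title><price>10</price></book>"
       b"<book><title>B</title><price>12</price></book></library>")
for path, text in extract(doc, ["/library/book/title", "/library/book/price"]):
    print(path, text)
```

Memory use depends on the nesting depth and the text of the currently open elements, not on the total size of the document.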

These requirements led to "Extractor for XPath." This is based on SAX, for streaming and multicasting support. First you need to register the XPaths and handlers. This absolutizes the XPaths. Then Extractor compiles XPaths. This requires determining whether or not the XPath is streamable. Can reject non-streamable XPaths. It also builds a predicate table and an index tree.

"XPath Tracking" maintains XPath state and matches document XPaths with the indexed XPaths. XPath is implemented as a state machine implemented via a stack. It uses fake nodes to handle /*/ and //. Output sends matching XPaths along with document content. Henry Thompson seems skeptical of the performance of the state machine. He thinks a "bottom-up parser" might be much faster. I really don't understand this. I'm just copying Scardina's notes.

I ran all the way across the convention hall carrying my sleeping laptop, something which I hate to do, to catch Sebastian Rahtz talking about "A Unified Model for Text Markup: TEI, DocBook, and Beyond." (Has anyone noticed that age is directly correlated with the care one takes of computer equipment? I am amazed at how cavalierly the students at Polytechnic treat their laptops. I suspect it involves both the cost and fragility of computers when one first learned to use them. At the rate we're going, children born this year will be playing hacky-sack with their laptops in the school yard.)

The "Beyond" part includes other formats like HTML and MathML. The main purpose of this seems to be to allow DocBook to be used in TEI and vice versa, for elements that one has and for which the other has no real equivalent; e.g. a DocBook guimenu element in a mainly TEI document. This is done with RELAX NG schemas. He recommends David Tolpin's RNV parser and James Clark's emacs mode for XML.

That's it for today. I'm going to wander into the park behind the convention center to see if it looks like a good site for some birding. Come back tomorrow for updates from the final day of the show.