Today I'm at WWW2004 in New York; I'll be here for the next four days. Fortunately there's wireless access here (in fact, there are several wireless networks) so I'll be reporting live. There don't seem to be a lot of PowerBooks here, but I should probably finally figure out how to use this iChat thingie to see if anyone else is chatting about this live. Wasn't there an O'Reilly article about this a year or so ago? Anyone have a URL? There don't seem to be a lot of power plugs here, though. This may grind to a halt in about two hours if I can't find a place to plug in.

Actually, it looks like people are using IRC. xmlhack (may not be dead yet) is running an IRC channel collecting various comments about the show. "To join in, point your IRC client at irc.freenode.net's #www2004 channel. The channel is publicly logged." I tried to log in, but Mozilla's IRC client froze on me. It looks like Mozilla 1.7 RC 2 has just been posted. I'll have to try that. OK, that seems to work. The chat is being logged for anyone who can't connect, or who just wants to read. 34 users online as of 3:00.

I'm doing something I don't normally do, editing this file directly on the live site, so there may be occasional glitches and spelling errors (or more than usual). I'll correct them as quickly as I can. Hmm, I already see one glitch. The non-ASCII characters got munged by BBEdit (again!). Can't we just all agree to use UTF-8, all the time? I'll fix it as soon as I get a minute. Also I reserve the right to change my mind and delete or rewrite something I've already written.

Scanning the papers, I now understand why my XOM poster was rejected. I submitted the actual poster instead of a paper about the subject of the poster. I just wish I could have gotten someone to tell me this before I wrote the thing. I asked repeatedly and was unable to get any useful information about what they were looking for in poster submissions.

Day 1 (after two days of tutorials) kicks off with a keynote address from Tim Berners-Lee. They're doing it in a ballroom with everyone seated at tables, which makes it feel a lot more crowded than it actually is. Still, it looks like there are about a thousand people here, give or take three hundred.

There are a few suits before Berners-Lee including someone from the ACM (a cosponsor I assume) pitching ACM membership, someone who brings up 9/11 for no apparent reason, and someone from the Mayor's office. (The Mayor is sorry he couldn't be here. We're very glad to have you here. Technology is important. He proclaims today to be World Wide Web Day. Yadda, yadda, yadda...)

OK, Berners-Lee is up now. He wants to randomly muse, especially on top-level domain names and the semantic web. He thinks .biz and .info have been little more than added expense. He doesn't see why we need more. .xxx is the wrong place to put metadata about what is and is not porn. People have different ideas about this. "I have a fairly high tolerance for people with no clothes on and a fairly low tolerance for violence." .mobi is also misguided, but for different reasons. We shouldn't need a different URI for mobile content. Instead use content negotiation (my inference, not his statement). Plus it's not clear what a mobile device is: no keyboard? low bandwidth? These issues change from device to device and over time. The device independence working group is addressing these, but the problem is more complicated than can be fixed by adding .mobi to the end of a few URIs. The W3C site works pretty well on cell phones. CSS is a success story we should celebrate.
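To make the content negotiation idea concrete, here is a minimal sketch in Python of a server picking a representation from the request's Accept header rather than from a separate URI. This is my illustration, not anything Berners-Lee showed. The function names and the sample media types are assumptions, and the matching is simplified: it handles exact types, `type/*`, and `*/*`, and ignores parameters other than `q`.

```python
def parse_accept(header):
    """Parse an Accept header into a {media_range: q} dictionary."""
    prefs = {}
    for part in header.split(","):
        fields = [field.strip() for field in part.split(";")]
        media_range = fields[0].lower()
        if not media_range:
            continue
        q = 1.0  # q defaults to 1 when the client does not state it
        for param in fields[1:]:
            if param.startswith("q="):
                try:
                    q = float(param[2:])
                except ValueError:
                    q = 0.0
        prefs[media_range] = q
    return prefs


def quality(media_type, prefs):
    """Return the q value of the most specific range that matches media_type."""
    major = media_type.split("/")[0]
    for candidate in (media_type, major + "/*", "*/*"):
        if candidate in prefs:
            return prefs[candidate]
    return 0.0


def choose_representation(accept_header, available):
    """Pick the available media type the client prefers, or None if none is acceptable."""
    prefs = parse_accept(accept_header)
    best = max(available, key=lambda t: quality(t, prefs), default=None)
    if best is None or quality(best, prefs) == 0.0:
        return None
    return best


# Hypothetical server that can produce the same page in two forms.
AVAILABLE = ["text/html", "application/vnd.wap.xhtml+xml"]

phone = "application/vnd.wap.xhtml+xml, text/html;q=0.5"
desktop = "text/html, */*;q=0.1"
print(choose_representation(phone, AVAILABLE))
print(choose_representation(desktop, AVAILABLE))
```

The point of the sketch is that the URI stays the same for both clients; only the representation sent back differs.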

On to the semantic Web. This actually helps with the mobile device problem by shipping the real info rather than a presentation of the real info. The user interface can be customized client side to the device. MIT's Haystack is an implementation of this idea. He wants to remove processing instructions from XML and bags from RDF (not that he really plans to do this or expects it to happen). His point is that we have RDF even if the syntax is imperfect. (That's an understatement.) It's time to start using RDF. The semantic web phase 2 is coming. I didn't realize phase 1 was finished or working. I guess he sees phase 1 as the RDF and OWL specs. Phase 2 is actually using this stuff to build applications. I'm still a skeptic. The semantic web requires URIs for names and identifiers. To drive adoption, don't change existing systems. Instead use RDF adapters. Justify on short term gain rather than network effect. We have to put up the actual OWL ontologies, not hide them behind presentational HTML. Let a thousand ontologies bloom. We don't need to standardize the ontologies. It's all about stitching different ontologies together.

I found a power plug, so I should be able to keep this up through at least the next couple of sessions. Unfortunately, the wireless network in the current room is having DNS troubles (or at least it is with my PowerBook; others seem to be connecting fine) so you may not read this in real time. :-( The XML sessions this afternoon are in a different room. Hopefully the wireless will work there.

As usual at good conferences, there are a lot of interesting sessions to choose from. I decided to sit in the security and privacy track for this morning. There appears to be only one half-day track on XML, happening this afternoon.

The session has three talks. First up is an IBM/Verity talk on "Anti-Aliasing on the Web" by Jasmine Novak, Prabhakar Raghavan, and Andrew Tomkins (presented by Novak). The question: Is it possible to discover if an author is writing under multiple aliases/pseudonyms by analyzing their text? Stylometrics uses a large body of text to figure out who wrote what. This goes back at least 40 years. Think of the outing of Joe Klein as the author of Primary Colors, but there are also cases where this technique has failed. Traditionally this uses small function words like "but", "and", etc. that are independent of topical vocabulary. Also emoticons. She claims they achieved an error rate of less than 8% based on fewer words. Their research is based on Court TV message boards, specifically the Laci Peterson case and the war in Iraq. They gave postings fake aliases and then attempted to reunite them. They used 50 messages, and removed signatures, headers, and other content that would clearly reveal who was who. The idea is to see if they can then reconnect the messages to the authors. Using all words proved to be the best algorithm, though in this case everyone was writing on the same topic to begin with, and the amount of text analyzed was small, so the traditional reasons for analyzing by function words don't apply. They use compression theory to measure the similarity of two texts, based on optimal encodings (KL similarity).
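For a rough feel of what a KL-based comparison looks like, here is a minimal sketch in Python. It is not the authors' algorithm: the whitespace tokenization, the add-one smoothing, and the function names are all my assumptions. It builds a smoothed word distribution for each text and treats the known alias with the smallest Kullback-Leibler divergence from the unknown text as the likeliest match.

```python
import math
from collections import Counter


def word_distribution(text, vocabulary, smoothing=1.0):
    """Smoothed relative word frequencies of text over a shared vocabulary."""
    counts = Counter(text.lower().split())
    total = sum(counts[w] for w in vocabulary) + smoothing * len(vocabulary)
    return {w: (counts[w] + smoothing) / total for w in vocabulary}


def kl_divergence(p, q):
    """KL divergence D(p || q) in bits: extra cost of encoding p with a code built for q."""
    return sum(p[w] * math.log2(p[w] / q[w]) for w in p)


def closest_alias(unknown_text, candidates):
    """Return the alias whose text has the lowest divergence from unknown_text."""
    vocabulary = set(unknown_text.lower().split())
    for text in candidates.values():
        vocabulary.update(text.lower().split())
    p = word_distribution(unknown_text, vocabulary)
    scores = {
        alias: kl_divergence(p, word_distribution(text, vocabulary))
        for alias, text in candidates.items()
    }
    return min(scores, key=scores.get)


# Made-up postings, purely for illustration.
known = {
    "alias_a": "the jury will surely convict him surely",
    "alias_b": "the war in iraq is a mistake honestly",
}
print(closest_alias("surely the jury will convict", known))
```

With texts this short the result mostly reflects topic overlap, which is exactly the weakness raised in the Q&A.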

Could this be fooled by a deliberate effort to fool it? e.g. I overuse the word use. What if I deliberately wrote under a pseudonym without using the word use? What if I used tools to check how similar something I was writing pseudonymously was to my usual writing? They have considered this, and think it would be possible.

There seems to be a big flaw in this research: the extremely small sample size, which is not nearly large enough to prove the algorithm actually works, especially since they used this data to choose the algorithm. To be valid this needs to be tested on many other data sets. Ideally this should be a challenge-response test; i.e. I send them data sets where they don't know who's who. I may be misinterpreting what she's saying about the methodology. I'll have to read the paper.

She says this would not scale to millions of aliases and terabytes of data. In Q&A she admits a problem with two people on opposite sides of the argument being misidentified as identical because they tend to use the same words when replying to each other.

Next up, Fang Yu of Academia Sinica is talking about static analysis of web application security. I'm quite fond of static analysis tools, in general. Because web applications are public, almost by definition, firewalls can't help. Symantec estimates that 8 of the top 10 threats are web application vulnerabilities. The problem is well known. You write a CGI script that passes commands to the shell, and the attacker passes unexpected data (e.g. "elharo; \rm -r *" instead of just a user name) to run arbitrary shell commands. JWIG and JIF are Java tools for doing static analysis for these problems. Yu is interested in weakly typed languages such as PHP. His WebSSARI tool does static analysis on PHP. This requires type inference, which imposes a performance cost. They analyzed over one million lines of open source PHP code in 38 projects. It reported 863 vulnerabilities, of which 607 were real problems. The rest were false positives. That's pretty good. (Question from the audience: how does one assess the false negative rate?) Co-authors include Yao-Wen Huang, Christian Hang, Chung-Hung Tsai, Der-Tsai Lee, and Sy-Yen Kuo.
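The injection problem described above is easy to show in a few lines. The sketch below is in Python rather than PHP, and it is only an illustration of the vulnerability, not of how WebSSARI works. The `finger` command and the function names are hypothetical. Nothing is executed: the code only builds the command strings and shows how a shell would tokenize them.

```python
import shlex


def build_command_unsafe(username):
    """Concatenate untrusted input straight into a shell command (vulnerable)."""
    return "finger " + username


def build_command_quoted(username):
    """Quote the untrusted input so the shell sees it as one literal argument."""
    return "finger " + shlex.quote(username)


def is_plain_username(username):
    """Stricter alternative: reject anything that is not purely alphanumeric."""
    return username.isalnum()


attack = "elharo; rm -r *"

# Unsafe: the attacker's text breaks out into extra tokens, including rm.
print(shlex.split(build_command_unsafe(attack)))

# Quoted: the whole string stays a single argument to finger.
print(shlex.split(build_command_quoted(attack)))

# Validation rejects the attack string outright but accepts a normal name.
print(is_plain_username(attack), is_plain_username("elharo"))
```

A static analyzer's job is to find the places where something like `build_command_unsafe` is reachable with data that came from the request and was never sanitized.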

Halvard Skogsrud of the University of New South Wales is talking about "Trust-Serv: Model-Driven Lifecycle Management of Trust Negotiation Policies for Web Services." (Co-authors: Boualem Benatallah and Fabio Casati.) Trust negotiation maps well to state machines.

The XML track kicks off the afternoon sessions (in a room with no wireless access at all, I'm sorry to say, so once again there won't be any realtime updates. Oh wait, looks like someone just turned on the router. Let's see if it works. Cool! It does. Updates commencing now.) There are about 45 people attending. There doesn't seem to be a lot of specifically XML content at this show, just three sessions. I guess most of the XML folks are going to the IDEAlliance shows instead. I've seen relatively few people I recognize, neither the usual XML folks nor the usual New York folks. I do know a lot of the New York web crowd are getting ready for CEBIT next week.

Quanzhong Li of the University of Arizona/IBM is talking about "XVM: A Bridge between XML Data and its Behavior." (Co-authors: Michelle Y Kim, Edward So, and Steve Wood) So far this seems like another server side framework that decides whether to send XML or HTML based on browser capabilities. It loads different code components for processing different elements as necessary. XVM stands for "XML Virtual Machine", but I don't quite understand why. I'll have to read the paper to learn more.

Fabio Vitali of the University of Bologna is talking about "SchemaPath, a Minimal Extension to XML Schema for Conditional Constraints" (Co-authors: Claudio Sacerdoti Coen and Paolo Marinelli). Before I hear it, I'd guess this is about how to say things like "the xinclude:include element can have an xpointer attribute only if the parse attribute has the value xml." OK, let's see if I guessed right.

They divide schema languages into grammar based (RELAX NG, DTD, W3C XML Schema, etc.) and rule-based languages (XLinkIt, Schematron). Hmm, I wonder if he wants to add rules to W3C XML Schemas? He notes requirements that cannot be expressed in W3C XML schemas:

An a element cannot contain another a element (even as a descendant, not just as a child).

He calls these co-constraints or, more commonly, co-occurrence constraints.

They add an xsd:alt element and an xsd:error type. The xsd:alt element allows conditional typing (one type if the condition is true, another type if it's false). The condition is expressed as an XPath expression in a cond attribute. The xsd:error type is used if the alternate condition is forbidden.

I need to look at this further, but this feels like a really solid idea that fills a major hole in the W3C XML Schema Language.

If I have this right, the XInclude constraint could be written like this:

    <xsd:element name="xinclude:include">
      <xsd:alt cond="@parse='text' and @xpointer" type="xsd:error"/>
      <xsd:alt type="SomeNamedType"/>
    </xsd:element>

This seems much simpler than adding a Schematron schema to a W3C XML Schema Language annotation. I wonder if these folks are working on Schemas 1.1 at the W3C?
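For comparison, here is roughly what the same XInclude co-occurrence constraint looks like when checked by hand in application code. This is a minimal Python sketch using the standard library's ElementTree, not anything from the SchemaPath paper; the function name and the sample document are my own.

```python
import xml.etree.ElementTree as ET

XINCLUDE_NS = "http://www.w3.org/2001/XInclude"


def invalid_includes(xml_text):
    """Return xi:include elements that combine parse='text' with an xpointer attribute."""
    root = ET.fromstring(xml_text)
    bad = []
    # iter() visits the root and every descendant with the given qualified name.
    for elem in root.iter("{%s}include" % XINCLUDE_NS):
        if elem.get("parse") == "text" and elem.get("xpointer") is not None:
            bad.append(elem)
    return bad


sample = """
<doc xmlns:xi="http://www.w3.org/2001/XInclude">
  <xi:include href="a.xml" parse="xml" xpointer="element(/1)"/>
  <xi:include href="b.txt" parse="text"/>
  <xi:include href="c.txt" parse="text" xpointer="element(/1)"/>
</doc>
"""

for elem in invalid_includes(sample):
    print("forbidden combination on include of", elem.get("href"))
```

Every application that cares about the rule has to repeat a check like this, which is the argument for being able to state it once in the schema.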

Martin Bernauer of the Vienna University of Technology is talking about "Composite Events for XML" (Co-authors: Gerti Kappel and Gerhard Kramler). By event based processing he means things like SAX or DOM 2 Events. Rules are executed/fired when triggers are detected. But DOM events are not always sufficient. He wants events that include multiple elements; e.g. an item element event followed by a price element event. Composite events combine these individual events into the level of granularity/clumpiness appropriate for the application. Traditionally this has been done with sequential events, but XML documents/events also have a hierarchical order. This work is based on something called "Snoop", which I haven't heard of before.
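To illustrate what combining primitive events into a composite one means, here is a minimal sketch in Python on top of the standard SAX API. It is not the event algebra from the paper. The class name is hypothetical, and the composite event is deliberately simple: a price start event that occurs as a direct child of a currently open item element. Nested item elements are not handled.

```python
import xml.sax


class ItemPriceDetector(xml.sax.ContentHandler):
    """Fires a composite event when a price element starts directly inside an item."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.item_depth = None  # depth of the currently open item, if any
        self.matches = 0

    def startElement(self, name, attrs):
        self.depth += 1
        if name == "item":
            self.item_depth = self.depth
        elif (name == "price" and self.item_depth is not None
              and self.depth == self.item_depth + 1):
            # Two primitive events (item, then price) in the right
            # hierarchical relationship: count one composite event.
            self.matches += 1

    def endElement(self, name):
        if name == "item" and self.depth == self.item_depth:
            self.item_depth = None
        self.depth -= 1


document = (
    b"<order>"
    b"<item><price>5</price></item>"
    b"<price>9</price>"
    b"<item><name/></item>"
    b"</order>"
)

detector = ItemPriceDetector()
xml.sax.parseString(document, detector)
print(detector.matches)
```

The depth bookkeeping is the hierarchical part: a purely sequential rule ("item followed by price") would also fire on the stray price that sits outside any item.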

One thing I've noted is how international this show is. Despite the U.S. location, it looks like a majority of the presenters and authors are from Europe or Asia. The attendees have a somewhat higher percentage of U.S. residents, but I'm still hearing a lot of Italian, Chinese, British English, and other languages in the halls.

For the second session of the afternoon I was torn between Search Engineering I and Mixing Markup and Style for Interactive Content, so I decided based on which room had power plugs and wireless access. "Two of the presenters are the two blondest W3C staff members."

First up is the W3C's Steven Pemberton on Web Forms - XForms 1.0. XForms had more implementations on the day of release than any other W3C spec. HTML forms are an immense success. XForms tries to learn the lessons of HTML forms. To this end:

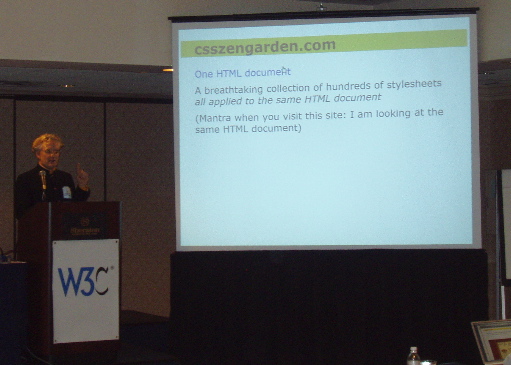

Look at CSS Zen Garden to prove you don't need to mix the document with presentation.

According to a study of 8,000 users, there are only four reasons people prefer one site to another:

For complex forms XForms adds:

XForms is more like an application environment. It could be used for things like spreadsheets.

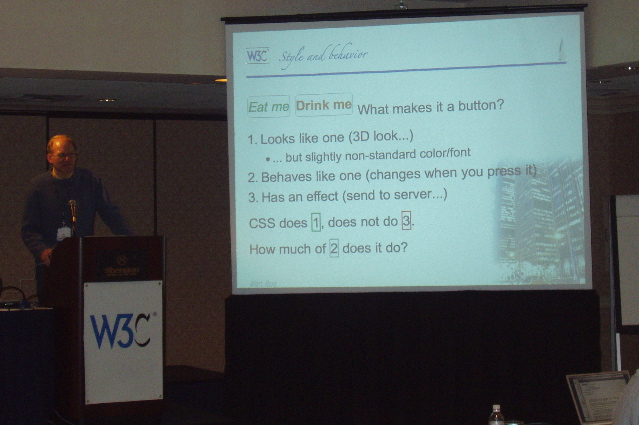

Bert Bos is talking about CSS3 for Behaviors and Hypertext. Should we style user interface widgets such as buttons? Probably not. Users won't recognize them, and they'll be ugly. But we might want to blend them in with the site's CSS.

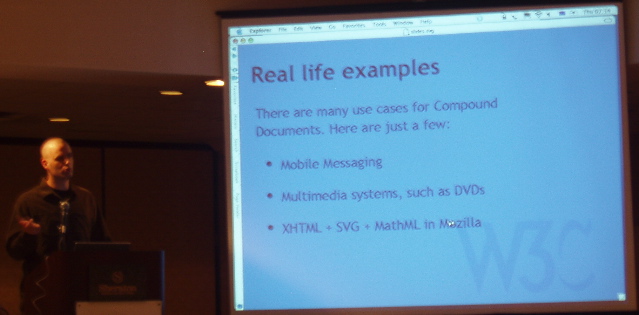

Dean Jackson closes the day's talks with Mixed markup; i.e. compound documents like an XHTML+SVG+MathML document or XHTML+XForms. No browser can handle this, so he had to write his own for this presentation. He uses SVG as a graphics API and maps HTML into the SVG graphics. The Adobe SVG viewer 6 preview is required to display this. edd (Dumbill?) on IRC: "every year I rant that I can't see SVG, every year I'm told it'll be better :( no dice." According to Jackson, the real problem is authoring these compound documents, and writing a DTD for them is painful.

I'm going to go look at the posters and the exhibits now. More tomorrow.